Prof. Dr. Grégoire Montavon

Research Group Lead

Prof. Dr. Grégoire Montavon is a Professor at Charité – Universitätsmedizin Berlin and a Research Group Lead at the Berlin Institute for the Foundations of Learning and Data (BIFOLD). He received a Master's degree in Communication Systems from the École Polytechnique Fédérale de Lausanne in 2009 and a Ph.D. in Machine Learning from the Technische Universität Berlin in 2013.

His research focuses on methods and applications of Explainable AI (XAI). A particular goal is to develop approaches that integrate well with state-of-the-art machine learning (ML) models used in medical diagnosis and research, so that these models can be verified and explored for insights.

Together with his colleagues, he developed the Layer-Wise Relevance Propagation (LRP) method, which efficiently and robustly explains the predictions of popular machine learning models such as deep neural networks.

- 2013 Dimitris N. Chorafas Award

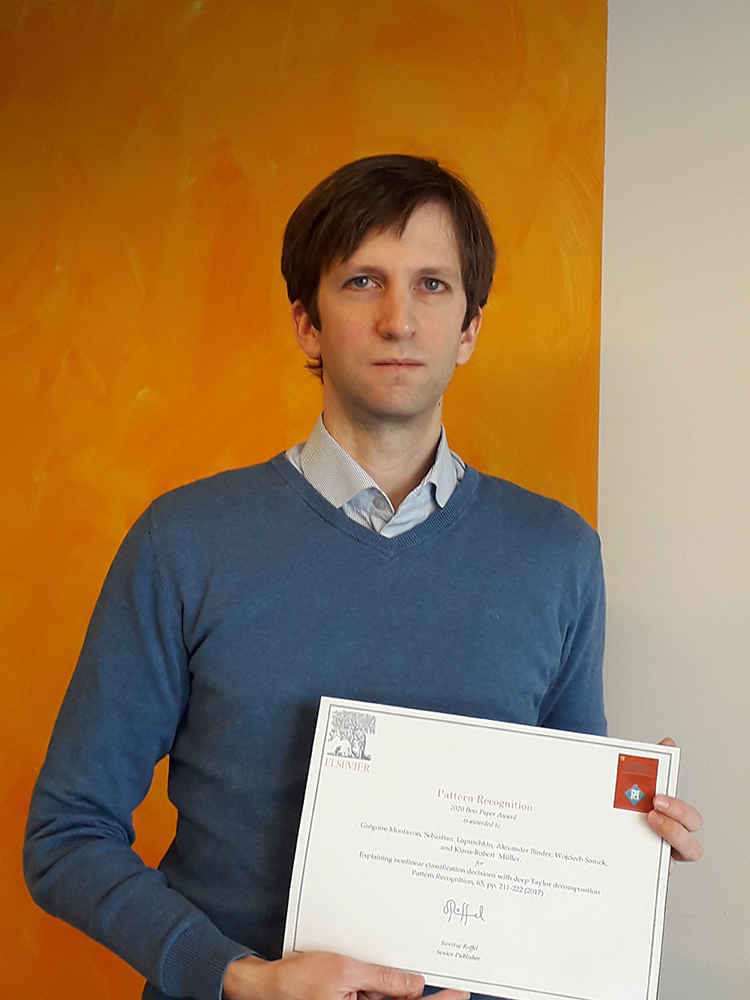

- 2020 Pattern Recognition Best Paper Award

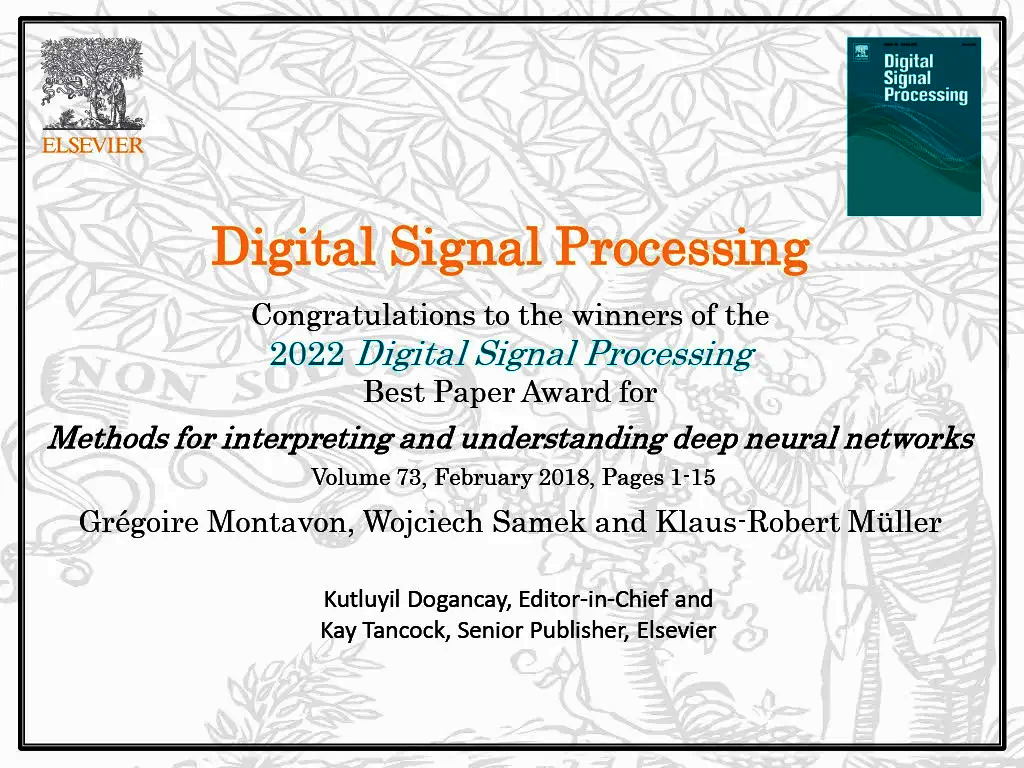

- 2022 Digital Signal Processing Best Paper Award

- Explainable AI

- Machine Learning

- Data Science

Scholar, European Laboratory for Learning and Intelligent Systems (ELLIS), 2021–present

Pattarawat Chormai, Klaus-Robert Müller, Grégoire Montavon

Investigating the Robustness of Subtask Distillation under Spurious Correlation

Pattarawat Chormai, Ali Hashemi, Klaus-Robert Müller, Grégoire Montavon

Distilling Lightweight Domain Experts from Large ML Models by Identifying Relevant Subspaces

Yasser Taha, Grégoire Montavon, Nils Körber

Drainage: A Unifying Framework for Addressing Class Uncertainty

Sidney Bender, Ole Delzer, Jan Herrmann, Heike Antje Marxfeld, Klaus-Robert Müller, Grégoire Montavon

Mitigating Clever Hans Strategies in Image Classifiers through Generating Counterexamples

Philipp Keyl, Julius Keyl, Andreas Mock, Gabriel Dernbach, Liliana H Mochmann, Niklas Kiermeyer, Philipp Jurmeister, Michael Bockmayr, Roland F Schwarz, Grégoire Montavon, Klaus-Robert Müller, Frederick Klauschen

Neural interaction explainable AI predicts drug response across cancers

Photo recap: AI in Medicine Workshop

Charité and BIFOLD hosted the “AI in Medicine” workshop in Berlin (November 24–25, 2025), bringing together leading experts on clinical AI, gene regulation modelling, medical LLMs and explainable AI to foster interdisciplinary collaboration between research and clinical practice.

XAI 2025: Best Paper Award

Congratulations to BIFOLD researchers Simon Letzgus, Klaus-Robert Müller and Grégoire Montavon, whose publication “XpertAI: uncovering regression model strategies for sub-manifolds” won the Best Paper Award at the 3rd World Conference on eXplainable Artificial Intelligence. BIFOLD researchers contributed to this leading XAI conference in several roles.

ICLR 2025 Conference Contributions

The BIFOLD research groups Machine Learning and Explainable Machine Learning in Medicine will participate in ICLR 2025, contributing eight papers, one blog article, and one talk. The conference takes place April 24–28, 2025, in Singapore.

New BIFOLD professorship at Charité

Welcome to Grégoire Montavon, who is taking up a BIFOLD-Charité professorship on April 1, 2025. The professorship is one of several planned BIFOLD professorships at Charité – Universitätsmedizin Berlin, an institutional partner of BIFOLD.

The Hidden Domino Effect

A team of BIFOLD researchers discovered that foundation models such as GPT, Llama and CLIP, which are trained with unsupervised learning methods, often produce compromised representations: instances can be predicted correctly from these representations, but only on the basis of data artefacts. In a publication in Nature Machine Intelligence, the researchers propose an explainable AI technique that can detect these false prediction strategies.

Insight into Deep Generative Models with Explainable AI

The recently completed agility project "Understanding Deep Generative Models" focused on making ML models more transparent in order to uncover their hidden flaws, a research area known as Explainable AI.

AI improves personalized cancer treatment

Personalized medicine aims to tailor treatments to individual patients. Until now, this has been done using only a small number of parameters to predict the course of a disease. A team of researchers from several universities and BIFOLD has developed a new approach to this problem using artificial intelligence (AI).

Publication Highlight – Pruning Clever-Hans strategies

Hidden Clever-Hans effects can undermine the reliability of AI models. The paper “Preemptively pruning Clever-Hans strategies in deep neural networks” introduces a method that corrects biases in neural networks without prior knowledge of faulty features.

Call for XAI Papers!

Two research groups associated with BIFOLD are helping to organize the 2nd World Conference on Explainable Artificial Intelligence. Each group is hosting a special track and has already published a Call for Papers. Researchers are encouraged to submit their papers by March 5, 2024.

DSP Best Paper Prize

BIFOLD researchers Prof. Klaus-Robert Müller, Prof. Wojciech Samek and Prof. Grégoire Montavon were honored by the journal Digital Signal Processing (DSP) with the 2022 Best Paper Prize. The DSP mention of excellence highlights important research findings published within the last five years.

2020 Pattern Recognition Best Paper Award

A team of scientists from TU Berlin, Fraunhofer Heinrich Hertz Institute (HHI) and University of Oslo has jointly received the 2020 “Pattern Recognition Best Paper Award” and “Pattern Recognition Medal” of the international scientific journal Pattern Recognition. The award committee honored the publication “Explaining Nonlinear Classification Decisions with Deep Taylor Decomposition” by Dr. Grégoire Montavon and Prof. Dr. Klaus-Robert Müller from TU Berlin, Prof. Dr. Alexander Binder from University of Oslo, as well as Dr. Wojciech Samek and Dr. Sebastian Lapuschkin from HHI.

Using machine learning to combat the coronavirus

A joint team of researchers from TU Berlin and the University of Luxembourg is exploring why a spike protein in the SARS-CoV-2 virus is able to bind much more effectively to human cells than other coronaviruses. Google.org is funding the research with 125,000 US dollars.

An overview of the current state of research in BIFOLD

Since the official announcement of the Berlin Institute for the Foundations of Learning and Data in January 2020, BIFOLD researchers have achieved a wide array of advances in Machine Learning and Big Data Management, as well as in a variety of application areas, by developing new systems and producing impactful publications. The following summary provides an overview of recent research activities and successes.