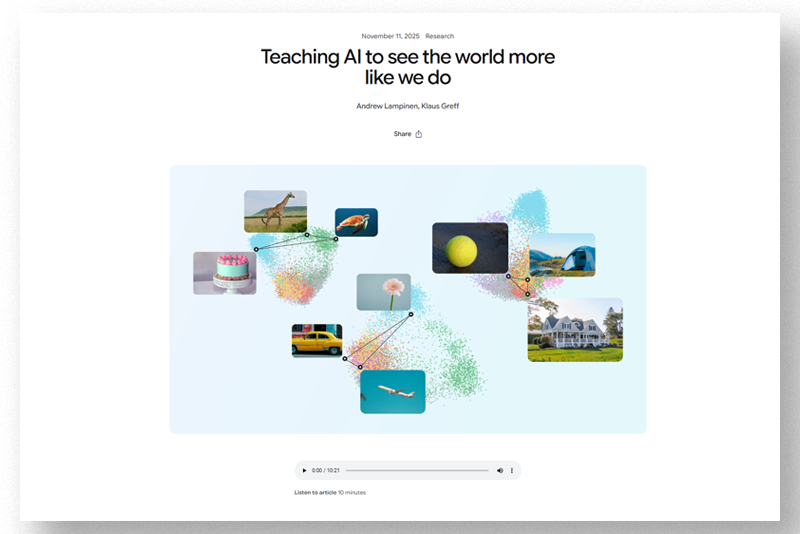

Teaching AI to perceive the world as humans do could change how machines understand reality.

In the field of artificial intelligence, performance has long been the benchmark. Bigger models, larger benchmarks, higher accuracy. But what if we’re chasing the wrong kind of progress? Dr. Lukas Muttenthaler earned his PhD summa cum laude in the Machine Learning (ML) Group at BIFOLD/TU Berlin in April 2025. Today, he is a senior machine learning research scientist at Aignostics and a part-time postdoctoral researcher at TU Munich’s Explainable Machine Learning Group. He believes the next leap in AI is not just about performance but about perspective.

My goal is to develop vision foundation models that can handle unfamiliar data, known in machine learning as out-of-distribution data, much like how the human brain adapts to unfamiliar situations. This is crucial for real-world applications such as autonomous driving or medical diagnostics, where both reliability and transparency are key.

This growing research domain, known as representational alignment, sits at the intersection of computer vision and cognitive science. It's not just a technical problem, but also a philosophical one: how can we design machines whose internal representations of the world mirror the way humans intuitively perceive and reason about their environment? One promising approach lies in looking inward, toward how human brains drive decisions.

Learning from the Human Brain

Lukas’s work draws directly on neuroscience and cognitive psychology, particularly the legacy of AI pioneer Geoffrey Hinton. During his PhD, he took part in a one-year research collaboration between TU Berlin and Google Brain, where he worked closely with Hinton's mentee, Simon Kornblith.

Humans are remarkably good at adapting to unfamiliar situations. That kind of flexibility is something current models lack.

Dr. Lukas Muttenthaler earned his PhD summa cum laude in 2025 at TU Berlin under the supervision of Prof. Dr. Klaus-Robert Müller. His thesis, “Representational alignment of humans and machines for computer vision,” explores how to align neural networks with human perception, a rare intersection of computer vision and cognitive science. During his PhD, he published a first-author Nature paper, spent time at Google DeepMind, and received an $80,000 Google Research Collabs Grant.

In the landmark paper “Aligning machine and human visual representations across abstraction levels”, Lukas and his colleagues tackled one of the central issues in representational alignment: the mismatch in how humans and machines organize conceptual knowledge. While humans build hierarchies that run from specific to abstract concepts, AI models tend to flatten these distinctions. The paper was published in the prestigious journal Nature, a remarkable accomplishment for Lukas, who was first author while still a doctoral student, and for his co-authors.

Their solution? Train a “teacher” model to learn the principles of human judgments and then post-train computer vision models using those human-aligned representations. The result: AI systems that are not only more interpretable but also perform better on unfamiliar or out-of-distribution data.
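To make the teacher-student idea concrete, here is a toy sketch. It is not the procedure from the paper. It only illustrates one simple way a "student" could be nudged toward a "teacher's" similarity structure: a linear projection `W` on top of fixed student features is fit by gradient descent so that the student's pairwise similarities match the teacher's. All names (`fit_student_projection`, `similarity_loss`, `gram`), the synthetic data, and the choice of a squared-error loss on Gram matrices are assumptions made for illustration.

```python
import numpy as np


def gram(Z):
    """Pairwise dot-product similarities between the rows of Z."""
    return Z @ Z.T


def similarity_loss(W, X, S_teacher):
    """Mean squared gap between student and teacher similarity matrices."""
    residual = gram(X @ W) - S_teacher
    return float(np.mean(residual ** 2))


def fit_student_projection(X, S_teacher, out_dim=4, lr=0.002, steps=1000, seed=0):
    """Fit a linear map W so that gram(X @ W) approximates S_teacher.

    Hypothetical helper: plain gradient descent with a hand-derived gradient.
    Returns the learned W and the loss history (initial loss first, final last).
    """
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    W = 0.1 * rng.normal(size=(X.shape[1], out_dim))
    history = []
    for _ in range(steps):
        Z = X @ W
        residual = gram(Z) - S_teacher          # symmetric n x n matrix
        history.append(float(np.mean(residual ** 2)))
        # d/dW of mean((Z Z^T - S)^2) with Z = X W and a symmetric residual
        grad_W = (4.0 / n**2) * X.T @ (residual @ Z)
        W -= lr * grad_W
    history.append(similarity_loss(W, X, S_teacher))
    return W, history


# Synthetic stand-ins (assumed): 32 "images", 8-dim student features,
# and a 4-dim teacher embedding that plays the role of human-aligned structure.
rng = np.random.default_rng(0)
X = rng.normal(size=(32, 8))
A = rng.normal(size=(8, 4))
T = X @ A / np.sqrt(8)
S_teacher = gram(T)

W, history = fit_student_projection(X, S_teacher)
print(f"similarity loss: {history[0]:.3f} -> {history[-1]:.3f}")
```

In this sketch only the similarity structure is transferred, not the teacher's coordinates, which is why the loss compares Gram matrices and not the embeddings themselves.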

Beyond Accuracy: Why Alignment Matters

It’s tempting to think that if a model works well, for example, if it demonstrates high predictive performance, then how it works doesn’t matter. But for Lukas and the ML team at BIFOLD, this couldn’t be further from the truth, especially in high-stakes fields like healthcare and autonomous driving.

Looking to the future, Lukas sees representational alignment not just as an academic problem but as a foundational problem for the next generation of AI. In a world increasingly mediated by algorithms, the ability to trust how machines “think” is no longer optional. Representational alignment may be the key to unlocking not just better AI, but better understanding between humans and machines. And if he is right, it’s time to stop asking what models see, and start asking how they see it.

__

Representational alignment is the study of the differences in how biological and artificial systems perceive, process, and internally “represent” information about the world.

This includes comparing how neural networks and human brains categorize, generalize, or make sense of visual and conceptual inputs.

The goal is to close the cognitive gap between machines and humans, so that AI systems not only perform well, but also think more like we do.

Video: AligNet: Human-Aligned Vision Models for Robust AI (6 min)

Three key works by Dr. Lukas Muttenthaler

Aligning Machine and Human Visual Representations Across Abstraction Levels. (Nature, 2025). Introduces a method for fine-tuning vision models using human semantic structures to improve both interpretability and generalization performance.

Getting Aligned on Representational Alignment. (TMLR, 2025). A cross-disciplinary perspective that proposes a unified framework to study representational alignment across AI, neuroscience, and cognitive science.

Improving Neural Network Representations Using Human Similarity Judgments. (NeurIPS, 2023). Develops techniques to align global representation spaces of neural networks with human perception, leading to better performance in few-shot and anomaly detection tasks.

Dr. Lukas Muttenthaler featured in the media