Dr. Thorsten Eisenhofer

Felix Weissberg, Lukas Pirch, Erik Imgrund, Jonas Möller, Thorsten Eisenhofer, Konrad Rieck

LLM-based Vulnerability Discovery through the Lens of Code Metrics

Jonas Möller, Lukas Pirch, Felix Weissberg, Sebastian Baunsgaard, Thorsten Eisenhofer, Konrad Rieck

Adversarial Inputs for Linear Algebra Backends

David Beste, Grégoire Menguy, Hossein Hajipour, Mario Fritz, Antonio Emanuele Cinà, Sébastien Bardin, Thorsten Holz, Thorsten Eisenhofer, Lea Schönherr

Exploring the Potential of LLMs for Code Deobfuscation

Roei Schuster, Jin Peng Zhou, Thorsten Eisenhofer, Paul Grubbs, Nicolas Papernot

Learned-Database Systems Security

Erik Imgrund, Thorsten Eisenhofer, Konrad Rieck

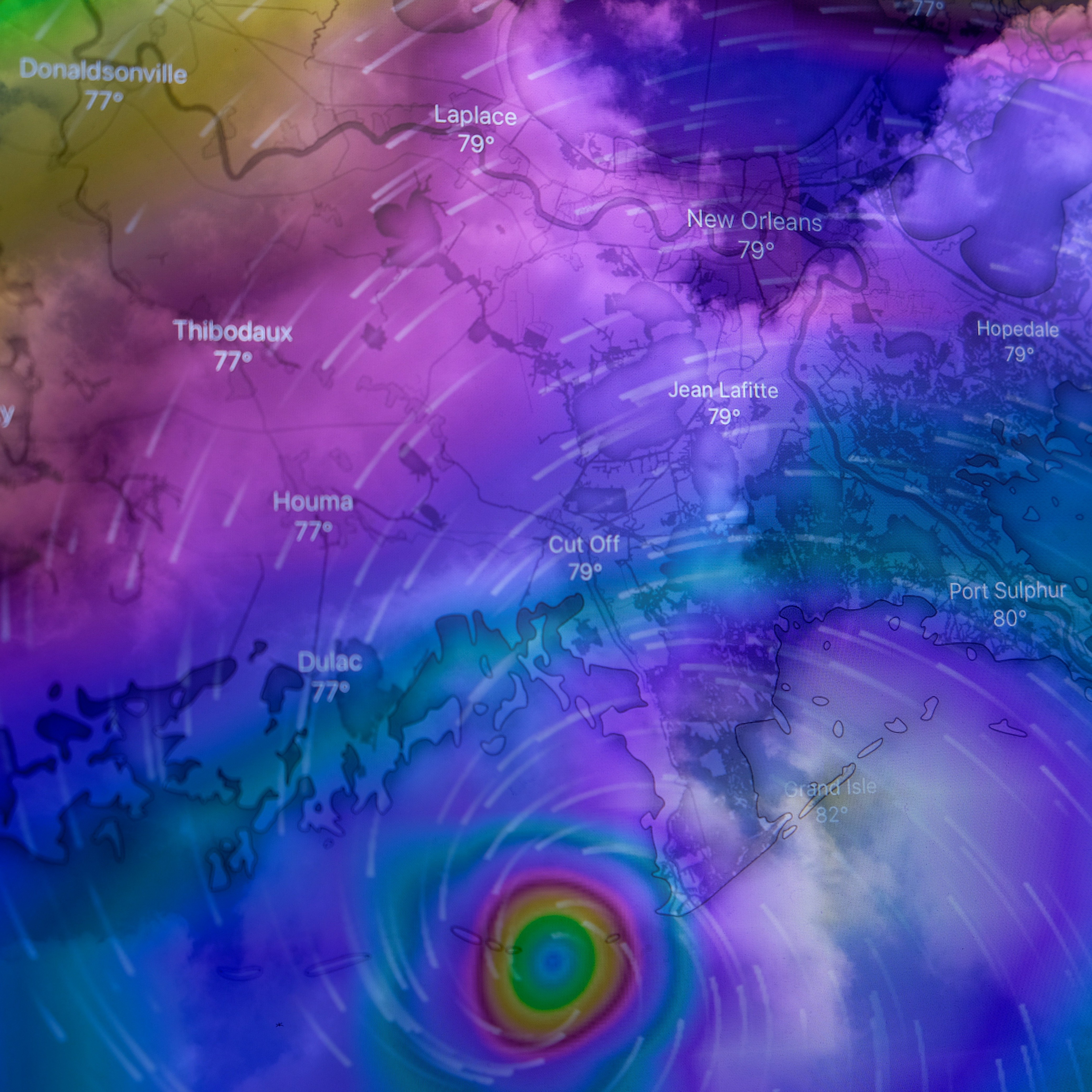

Adversarial Observations in Weather Forecasting

ACM CCS 2025: Distinguished Paper Award

Congratulations to BIFOLD researchers Erik Imgrund, Thorsten Eisenhofer and Konrad Rieck from the ML Sec group, whose paper “Exposing Security Risks in AI Weather Forecasting” received a Distinguished Paper Award at the ACM Conference on Computer and Communications Security (CCS) 2025.

Attacking privacy leaks in virtual backgrounds

Peeking through the virtual curtain: A new study by the BIFOLD MLSEC group reveals that current virtual backgrounds in video calls can leak enough pixels from the environment to reconstruct objects in the background.

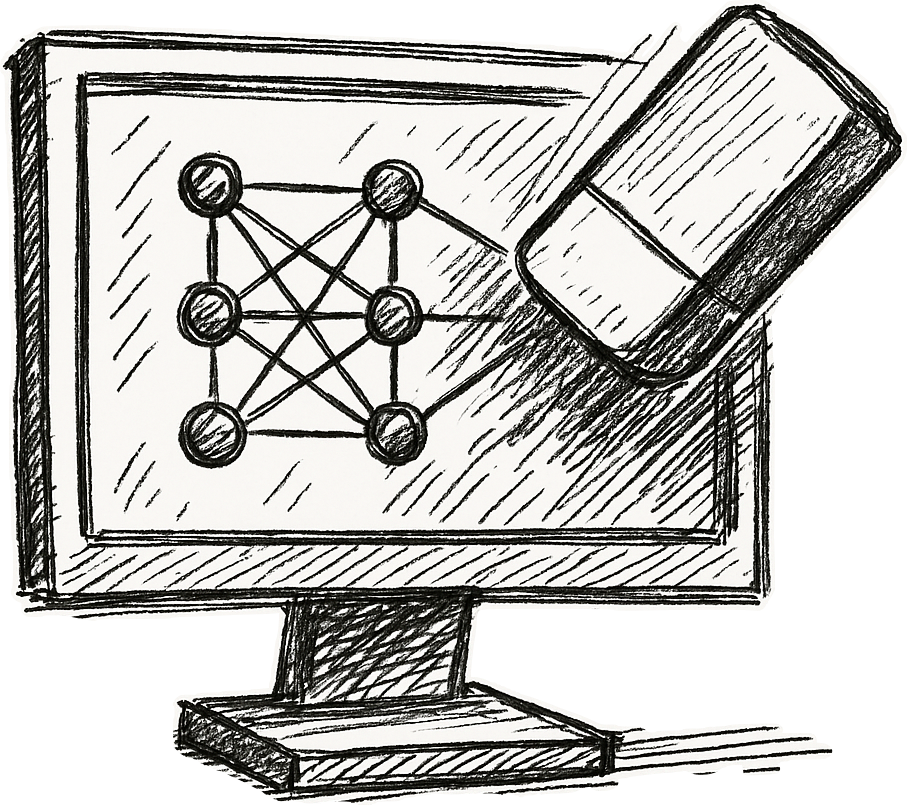

IEEE SaTML 2025 Conference Contribution

Dr. Thorsten Eisenhofer will present the paper “Verifiable and Provably Secure Machine Unlearning” at SaTML 2025. Eisenhofer is a postdoc in the research group “Machine Learning and Security”. The paper introduces a framework, backed by cryptographic proofs, for verifying that user data has been correctly deleted from machine learning models.