New research forms a link between human and machine representations

Although so-called Vision Foundation Models, computer models for automated image recognition, have made tremendous progress in recent years, they still differ significantly from human visual understanding. For example, they generally fail to capture multi-level semantic hierarchies and struggle with relationships between semantically related but visually dissimilar objects. In a joint project with Google DeepMind, the Max-Planck-Institute for Human Cognitive and Brain Sciences, and the Max-Planck-Institute for Human Development, BIFOLD researchers from the Machine Learning group, chaired by BIFOLD Co-director Prof. Dr. Klaus-Robert Müller at TU Berlin, have now developed a new approach, “AligNet”, that for the first time integrates human semantic structures into neural image processing models, bringing the visual understanding of these computer models closer to that of humans. Their paper, “Aligning Machine and Human Visual Representations across Abstraction Levels”, has now been published in the prestigious scientific journal “Nature”.

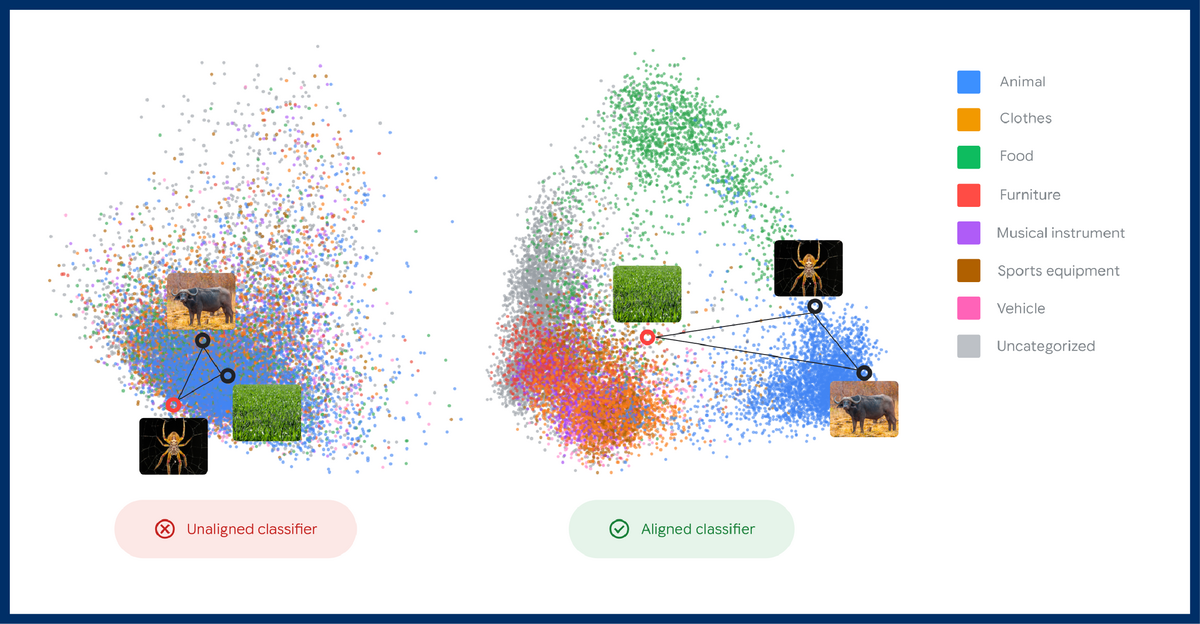

The scientists are investigating how visual representations in modern deep neural networks are structured compared to human perception and conceptual knowledge, and how the two can be better aligned. Although artificial intelligence (AI) today achieves impressive performance in image processing, machines often generalize less robustly than humans, for instance when faced with new types of images or unfamiliar relations.

“The central question of our study is: what do modern machine learning systems lack to show human-like behavior, not only in terms of performance, but also in how they organize and form representations?” explains lead author Dr. Lukas Muttenthaler, scientist at BIFOLD, the Max-Planck-Institute for Human Cognitive and Brain Sciences, and former employee at Google DeepMind.

The researchers show that human knowledge is typically organized hierarchically, from fine-grained distinctions (e.g., “pet dog”) to coarse ones (e.g., “animal”). Machine learning systems, on the other hand, often fail to capture these different levels of abstraction and semantics. To align the models with human conceptual knowledge, the scientists first trained a teacher model to imitate human similarity judgments. This teacher model thus learned a representational structure that can be considered “human-like.” The learned structure was then used to improve already pretrained, high-performing Vision Foundation Models, the so-called student models, through a process called soft alignment. This fine-tuning is several orders of magnitude cheaper computationally than retraining the models from scratch.
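The teacher-student idea can be illustrated with a small sketch. This is not the authors' implementation: the article does not spell out the objective, so the sketch assumes a generic distillation-style loss in which each image's similarities to the other images are turned into a probability distribution, and the student is penalized by the KL divergence from the teacher's distribution. The function names, the `temperature` parameter, and the toy embeddings are all hypothetical.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def similarity_distribution(embeddings, anchor, temperature):
    """Softmax over the anchor's similarity to every other item."""
    scores = [
        cosine_similarity(embeddings[anchor], other) / temperature
        for index, other in enumerate(embeddings)
        if index != anchor
    ]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def soft_alignment_loss(teacher_emb, student_emb, temperature=0.1):
    """Mean KL(teacher || student) over all anchors (assumed objective)."""
    loss = 0.0
    for anchor in range(len(teacher_emb)):
        p = similarity_distribution(teacher_emb, anchor, temperature)
        q = similarity_distribution(student_emb, anchor, temperature)
        loss += sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return loss / len(teacher_emb)

# Toy embeddings for three images (made-up values, not real model outputs).
teacher = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]            # groups images 0 and 1
student = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.1, 0.9, 0.0]]  # groups images 1 and 2

print(soft_alignment_loss(teacher, teacher))  # identical structure gives zero loss
print(soft_alignment_loss(teacher, student))  # mismatched structure gives a positive loss
```

The loss compares similarity structure rather than raw embeddings, so teacher and student may have different embedding dimensions. In actual fine-tuning, a quantity of this kind would be minimized with respect to the student's parameters; the sketch only computes the value.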

A crucial step toward interpretable, cognitively grounded AI

The student models were fine-tuned using “AligNet”, a large image dataset synthetically generated through the teacher model that incorporates similarity judgments corresponding to human perceptions. To evaluate the fine-tuned student models, the researchers used a specially collected dataset known as the “Levels” dataset. “For this dataset, around 500 participants performed an image-similarity task that covered multiple levels of semantic abstraction, from very coarse categorizations to fine-grained distinctions and category boundaries. For each judgment, we recorded both full response distributions and reaction times to capture potential links with human decision uncertainty. The resulting dataset represents a newly established benchmark for human-machine alignment, which we open-sourced,” reports Frieda Born, PhD student at BIFOLD and the MPI for Human Development.
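To make the notion of a "full response distribution" concrete, the following sketch tallies how often participants picked each option for a single judgment and summarizes their disagreement as Shannon entropy. Using entropy as the uncertainty measure is an assumption made here for illustration; the article does not state which measure the authors used. The option labels and responses are hypothetical.

```python
import math
from collections import Counter

def response_distribution(choices):
    """Fraction of participants who selected each option."""
    counts = Counter(choices)
    total = len(choices)
    return {option: count / total for option, count in counts.items()}

def entropy_bits(distribution):
    """Shannon entropy in bits; 0 means all participants agreed."""
    return -sum(p * math.log2(p) for p in distribution.values() if p > 0)

# Hypothetical responses from four participants to two different judgments.
unanimous = response_distribution(["A", "A", "A", "A"])
split = response_distribution(["A", "B", "A", "B"])

print(entropy_bits(unanimous))  # no disagreement
print(entropy_bits(split))      # an even two-way split carries one bit
```

A higher entropy marks judgments on which people disagree, which is the kind of signal that could then be compared with reaction times.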

The models trained with “AligNet” show significant improvements in alignment with human judgments, including up to a 93.5% relative improvement in coarse semantic evaluations. In some cases, they even surpass the reliability of human ratings. Moreover, these models exhibit no loss in performance; on the contrary, they demonstrate consistent performance increases (25% to 150% relative improvement) across various complex real-world machine learning tasks, all at minimal computational cost.
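The figures above are relative improvements, meaning the change is expressed as a percentage of the baseline score rather than in percentage points. A minimal sketch of that calculation follows; the scores in the example are hypothetical and are not taken from the paper.

```python
def relative_improvement(baseline, improved):
    """Change from `baseline` to `improved`, as a percentage of `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (improved - baseline) / baseline * 100.0

# Hypothetical scores: an alignment score rising from 0.50 to 0.75.
print(relative_improvement(0.50, 0.75))  # 50.0
```

Read this way, a 93.5% relative improvement means the score after fine-tuning is 1.935 times the baseline score, whatever the baseline was.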

Klaus-Robert Müller: “Our research methodologically bridges cognitive science (human levels of abstraction) and modern deep-learning practice (Vision Foundation Models), thus forming a link between the concept of representation in humans and in machines. This represents an important step toward more interpretable, cognitively grounded AI.”

Dr. Andrew K. Lampinen from Google DeepMind adds: “We propose an efficient way to teach computer vision models about the hierarchical structure of human conceptual knowledge. We show that this not only makes the representations of these models more human-like, and therefore more interpretable, but also improves their predictive power and robustness across a wide range of vision tasks.”

Publication:

DOI: https://doi.org/10.1038/s41586-025-09631-6

“Levels” dataset: