How subtle imprecision in backend libraries can undermine AI security

Machine learning frameworks like TensorFlow and PyTorch have revolutionized the deployment of neural networks across different platforms, from smartphones to autonomous vehicles and cloud services. They do this by relying on specialized backend libraries that perform the heavy mathematical lifting underlying AI decisions. Several such backends exist, each tailored to specific platforms, such as Intel MKL, Nvidia CUDA, and Apple Accelerate. But what happens when these backends, though functionally equivalent, behave ever so slightly differently under the hood? BIFOLD researcher Konrad Rieck and his team presented a paper at ICML 2025 that explores exactly this question and reveals a subtle yet important vulnerability: the same neural network can produce different outputs depending on which backend library is used. The research demonstrates that these discrepancies are not mere technicalities; they can be exploited for attacks.

Exploiting tiny differences

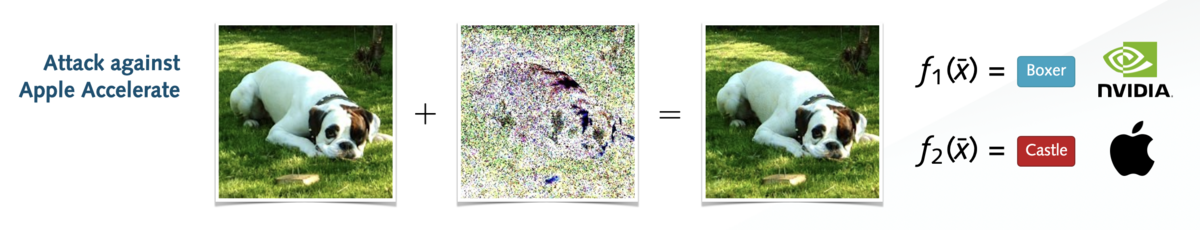

The core contribution of the paper is the concept of so-called “Chimera examples”: specially crafted inputs that cause a neural network to produce conflicting predictions solely depending on which backend math library runs the computations. “For example, the same image input might be classified as a ‘cat’ when processed on an Intel backend, but as a ‘dog’ when processed using Nvidia’s CUDA library, without any changes to the model itself”, explains Konrad Rieck, who chairs the Machine Learning and Security group at BIFOLD. The reason is that different backends perform mathematical operations in slightly different orders and blocks to improve performance. Because floating-point arithmetic is not associative, each ordering rounds intermediate results differently. While these variations usually result in imperceptibly small numerical differences, the researchers show that such tiny changes can be amplified through the layers of a neural network, ultimately resulting in diverging predictions.
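To make the mechanism concrete, here is a toy sketch in Python. It assumes nothing about how MKL, CUDA, or Accelerate actually schedule their computations: two hypothetical summation routines, `sum_sequential` and `sum_blocked`, add the same whole-number terms in different orders, and a hypothetical threshold rule `classify` turns the resulting score into a label. The values are deliberately extreme so that the rounding effect shows up in a single step; this is not how the paper constructs Chimera examples.

```python
import math


def sum_sequential(values):
    """Hypothetical 'backend A': plain left-to-right accumulation."""
    acc = 0.0
    for v in values:
        acc += v
    return acc


def sum_blocked(values, block=2):
    """Hypothetical 'backend B': sum fixed-size blocks, then combine partials.

    Blocking like this is a common performance trick in optimized kernels.
    """
    partials = []
    for i in range(0, len(values), block):
        partial = 0.0
        for v in values[i:i + block]:
            partial += v
        partials.append(partial)
    return sum_sequential(partials)


def classify(score, threshold=0.5):
    """Hypothetical decision rule on top of the computed score."""
    return "cat" if score > threshold else "dog"


# Whole-number terms whose exact sum is 2.0. Near 1e16 the spacing between
# adjacent doubles is 2, so adding 1.0 to a large partial sum rounds it away.
terms = [1e16, 1.0, -1e16, 1.0]

print(math.fsum(terms))                                        # 2.0 (correctly rounded reference)
print(sum_sequential(terms), classify(sum_sequential(terms)))  # 1.0 cat
print(sum_blocked(terms), classify(sum_blocked(terms)))        # 0.0 dog
```

Both routines are legitimate ways to compute the same sum, yet neither returns the exact total, and because they round at different points, the threshold maps them to different labels. In a real network the per-operation discrepancies are far smaller, which is why amplification across layers matters.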

Interestingly, Chimera examples can be constructed entirely from whole numbers, such as image pixel values or counts, which is exactly the kind of data many real-world applications process. This means that the attack is not only theoretically feasible but practically relevant: a regular image, audio recording, or text document might be a Chimera example and yield different predictions depending on the backend library used. As AI systems are increasingly distributed across multiple devices and platforms, there is a risk that adversaries could exploit this vulnerability to manipulate predictions or to hide their traces behind conflicting predictions.

“We were surprised by this unexpected attack surface in AI systems. While we were aware of the subtle differences between libraries, we were amazed that they could be controlled so precisely and eventually flip a neural network’s prediction on a specific platform only,” explains Konrad Rieck.

Fighting back: Noise as a defense

In their publication, the researchers also turned to the challenge of preventing adversaries from discovering Chimera examples. Constructing defenses in adversarial machine learning is notoriously hard, and previous work has repeatedly shown that establishing guaranteed robustness is a tedious and often fruitless task. As a remedy, the researchers developed a defense based on a different principle: they add “keyed noise” to each input before it is processed by the neural network. This noise, derived from a secret key, is too small to affect the prediction but makes it hard for an adversary to detect differences between backends. Experiments show that this approach can significantly reduce the number of misclassifications caused by Chimera inputs without harming overall model performance.
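The article does not spell out how the keyed noise is generated, so the following is only a minimal sketch under stated assumptions. The hypothetical function `keyed_noise` derives a seed from a secret key and the input via HMAC-SHA256 and adds uniform noise in [-epsilon, epsilon] to every value. The HMAC construction, the uniform distribution, and the default `epsilon=1e-3` are illustrative choices, not the paper's scheme.

```python
import hashlib
import hmac
import random


def keyed_noise(key: bytes, x: list[float], epsilon: float = 1e-3) -> list[float]:
    """Return a copy of x with small key-dependent noise added to each value.

    Hypothetical sketch: HMAC-SHA256 seeding and uniform noise in
    [-epsilon, epsilon] are illustrative assumptions, not the paper's method.
    """
    # Derive a seed from the secret key and the exact input values, so the
    # same input always receives the same perturbation.
    message = ",".join(repr(v) for v in x).encode("utf-8")
    seed = hmac.new(key, message, hashlib.sha256).digest()
    # Mersenne Twister seeded from the HMAC digest (illustrative only).
    rng = random.Random(seed)
    return [v + rng.uniform(-epsilon, epsilon) for v in x]


# Usage with a hypothetical server-side key and whole-number pixel values.
key = b"hypothetical-secret-key"
pixels = [0.0, 128.0, 255.0]
noisy = keyed_noise(key, pixels)
print(noisy)
```

Binding the seed to the input keeps the defense deterministic for a given input, while someone without the key cannot predict the exact values that reach the backend. Keeping epsilon small relative to the input scale is meant to leave the prediction itself unchanged.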

“Defending against adversaries is a constant cat-and-mouse game. While the attack surface we uncovered cannot be easily eliminated, our defense at least makes Chimera attacks significantly harder to carry out in practice,” states Konrad Rieck.

Publication:

Adversarial Inputs for Linear Algebra Backends. Jonas Möller, Lukas Pirch, Felix Weissberg, Sebastian Baunsgaard, Thorsten Eisenhofer, Konrad Rieck. ICML 2025.

https://mlsec.org/docs/2025-icml.pdf