Sustainable Federated Learning

Aligning the energy demand with the availability of green energy

The vast majority of today's machine learning applications are centralized, meaning that all required training data are located in a single datacenter. Yet, in real-world scenarios, different parties often want to collectively train a machine learning model without sharing their own data. “For example, healthcare facilities might want to develop predictive models for patient diagnoses and treatment recommendations without the need to share sensitive patient data”, says Prof. Dr. Odej Kao, chair of Distributed and Operating Systems at TU Berlin and BIFOLD Fellow. For such use cases, Federated Learning (FL) has emerged as a distributed alternative to centralized training. In Federated Learning, each collaborator trains the model on its own data and shares only model updates, so the training data itself never has to be transferred.
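To make "training without transferring data" concrete, here is a minimal sketch of federated averaging (FedAvg), one widely used way to aggregate models in FL. The article does not say which aggregation scheme FedZero uses; the client names, the one-parameter linear model, and the learning rate are illustrative assumptions. Each simulated collaborator runs a few gradient steps on its own samples and returns only its updated weight, which the server averages in proportion to local dataset size.

```python
from dataclasses import dataclass


@dataclass
class Client:
    """A collaborator whose (x, y) samples stay local (illustrative setup)."""
    name: str
    data: list  # list of (x, y) pairs, never sent to the server

    def local_update(self, w: float, lr: float = 0.01, epochs: int = 5) -> float:
        # Gradient descent on mean squared error for the model y = w * x,
        # computed only from this client's own samples.
        for _ in range(epochs):
            grad = sum(2 * (w * x - y) * x for x, y in self.data) / len(self.data)
            w -= lr * grad
        # The client returns only its updated parameter; the server additionally
        # uses the sample count below for weighting.
        return w


def fedavg_round(global_w: float, clients: list) -> float:
    # Server: average the returned parameters, weighted by local dataset size.
    total = sum(len(c.data) for c in clients)
    return sum(c.local_update(global_w) * len(c.data) for c in clients) / total


# Hypothetical collaborators whose private data roughly follow y = 2x.
clients = [
    Client("hospital_a", [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]),
    Client("hospital_b", [(1.5, 3.0), (4.0, 8.1)]),
]

w = 0.0
for _ in range(20):
    w = fedavg_round(w, clients)
print(round(w, 2))
```

After 20 rounds, `w` settles slightly above 2, the slope both hospitals' data roughly follow, although the aggregation step only ever handles model parameters and sample counts.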

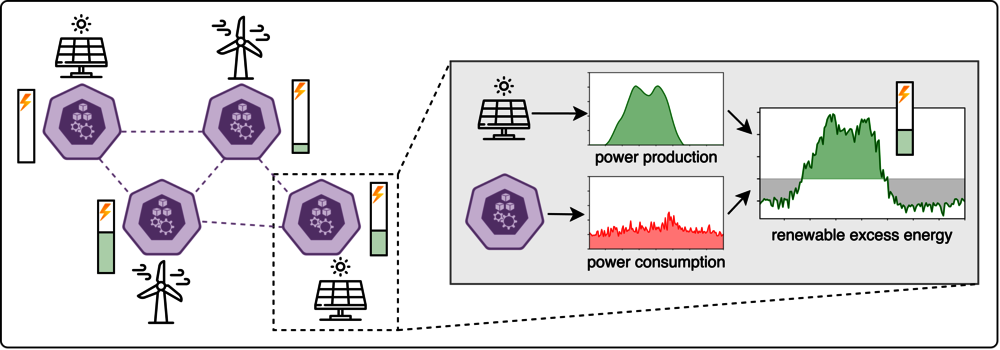

Unfortunately, applying Federated Learning is expected to further increase the power consumption of machine learning, which is already recognized as one of the most energy-intensive computational applications today. However, due to its distributed nature, Federated Learning also offers new opportunities to align this demand with the availability of green energy.

FL without causing any operational carbon emissions?

BIFOLD researchers Philipp Wiesner, Dennis Grinwald, Pratik Agrawal, Lauritz Thamsen, and Odej Kao, together with Ramin Khalili from the Huawei Munich Research Center, took this research question to the extreme by demonstrating how Federated Learning can be performed exclusively on green energy, that is, without causing any operational carbon emissions. To this end, they propose a new system called FedZero, which schedules Federated Learning based on the availability of renewable excess energy. Excess energy, also called stranded energy, occurs when renewable sources such as solar or wind produce more power than is immediately demanded. FedZero uses this energy, together with spare computational resources, to run Federated Learning, effectively reducing the training’s operational carbon emissions to zero.
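The scheduling idea can be illustrated with a hypothetical client-selection rule: before each round, consult assumed per-client forecasts of renewable excess energy and spare compute, admit only clients that could finish the round on excess energy alone, and postpone the round if too few qualify. The names (`ClientForecast`, `plan_round`), the energy-per-round figure, and the thresholds are assumptions for illustration; this is not FedZero's actual scheduler, which is specified in the paper.

```python
from dataclasses import dataclass


@dataclass
class ClientForecast:
    """Assumed per-client forecast for the next training round (hypothetical)."""
    name: str
    excess_energy_wh: float  # renewable excess energy expected at this client
    spare_capacity: float    # expected fraction of idle compute, 0.0 to 1.0


def plan_round(forecasts, energy_per_round_wh, min_capacity, min_clients, max_clients):
    """Hypothetical rule: return the clients for the next round, or [] to postpone."""
    # Keep only clients that could complete one round on excess energy alone
    # and that have enough idle compute to do so.
    eligible = [
        f for f in forecasts
        if f.excess_energy_wh >= energy_per_round_wh and f.spare_capacity >= min_capacity
    ]
    if len(eligible) < min_clients:
        return []  # too little excess energy right now: wait instead of using grid power
    # Prefer the clients with the largest energy surplus.
    eligible.sort(key=lambda f: f.excess_energy_wh, reverse=True)
    return [f.name for f in eligible[:max_clients]]


forecasts = [
    ClientForecast("solar_site_a", excess_energy_wh=120.0, spare_capacity=0.8),
    ClientForecast("wind_site_b", excess_energy_wh=40.0, spare_capacity=0.9),
    ClientForecast("solar_site_c", excess_energy_wh=300.0, spare_capacity=0.5),
    ClientForecast("grid_only_d", excess_energy_wh=0.0, spare_capacity=1.0),
]

selected = plan_round(forecasts, 100.0, 0.3, min_clients=2, max_clients=3)
print(selected)   # ['solar_site_c', 'solar_site_a']

postponed = plan_round(forecasts, 100.0, 0.3, min_clients=3, max_clients=3)
print(postponed)  # []
```

Postponing instead of falling back to grid power is what keeps the sketch's operational emissions at zero; the price is that training progresses only as fast as excess energy becomes available.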

However, scheduling with a limited supply of renewable energy introduces novel challenges, a topic BIFOLD researchers previously investigated in the context of cloud datacenters (see the publications below). “In Federated Learning, we additionally encounter the issue of bias: Collaborators with very little access to excess energy may be underrepresented in the final model”, explains Philipp Wiesner. To mitigate this, FedZero dynamically adjusts the pace of training and prioritizes contributors who previously could not participate adequately.
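One simple way to counter the participation bias described above is to rank the clients that currently have excess energy by how often they have already contributed and to pick the least-represented ones first. This is a generic fairness heuristic, not FedZero's published selection strategy; it assumes hypothetical client names and a fixed number of clients per round, and it does not model FedZero's dynamic adjustment of the training pace.

```python
from collections import Counter


def select_fairly(eligible, participation, k):
    """Hypothetical fairness rule: among clients that currently have enough
    excess energy, pick the k that have participated least so far."""
    # Sort by past participation count; the name breaks ties deterministically.
    ranked = sorted(eligible, key=lambda name: (participation[name], name))
    chosen = ranked[:k]
    for name in chosen:
        participation[name] += 1  # record that these clients contributed
    return chosen


participation = Counter()  # missing clients count as 0 participations
rounds = [
    ["sunny", "windy"],            # round 1: "cloudy" has no excess energy
    ["sunny", "windy", "cloudy"],  # round 2
    ["sunny", "windy", "cloudy"],  # round 3
]
history = [select_fairly(eligible, participation, k=2) for eligible in rounds]
print(history)        # [['sunny', 'windy'], ['cloudy', 'sunny'], ['cloudy', 'windy']]
print(participation)  # every client has participated twice
```

Although `cloudy` misses the first round, it is picked first as soon as it has excess energy again, so after three rounds each client has contributed equally. A rule that always chose the clients with the most energy would not guarantee this.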

The paper will be presented on June 5, 2024, at the 15th ACM International Conference on Future and Sustainable Energy Systems (e-Energy ’24) in Singapore.

The Publications in Detail:

- Philipp Wiesner, Ramin Khalili, Dennis Grinwald, Pratik Agrawal, Lauritz Thamsen, and Odej Kao. "FedZero: Leveraging Renewable Excess Energy in Federated Learning." In Proceedings of the 15th ACM International Conference on Future and Sustainable Energy Systems (e-Energy ’24), 2024. https://dl.acm.org/doi/10.1145/3632775.3639589

- Philipp Wiesner, Dominik Scheinert, Thorsten Wittkopp, Lauritz Thamsen, and Odej Kao. "Cucumber: Renewable-Aware Admission Control for Delay-Tolerant Cloud and Edge Workloads." In Proceedings of the 28th International European Conference on Parallel and Distributed Computing (Euro-Par 2022: Parallel Processing), pp. 218–232, 2022. https://doi.org/10.1007/978-3-031-12597-3_14

- Philipp Wiesner, Ilja Behnke, Dominik Scheinert, Kordian Gontarska, and Lauritz Thamsen. "Let’s Wait Awhile: How Temporal Workload Shifting Can Reduce Carbon Emissions in the Cloud." In Proceedings of the 22nd International Middleware Conference (Middleware ’21), December 2021. https://doi.org/10.1145/3464298.3493399