BIFOLD Fellow Prof. Dr. Konrad Rieck, head of the Institute of System Security at TU Braunschweig, and his colleagues provide the first comprehensive analysis of image-scaling attacks on machine learning, including a root-cause analysis and effective defenses. Rieck and his team showed that attacks on scaling algorithms, such as those used in pre-processing for machine learning (ML), can manipulate images unnoticeably, change their content after downscaling, and create unexpected and arbitrary image outputs. “These attacks are a considerable threat, because scaling as a pre-processing step is omnipresent in computer vision,” says Konrad Rieck. The work was presented at the USENIX Security Symposium 2020.

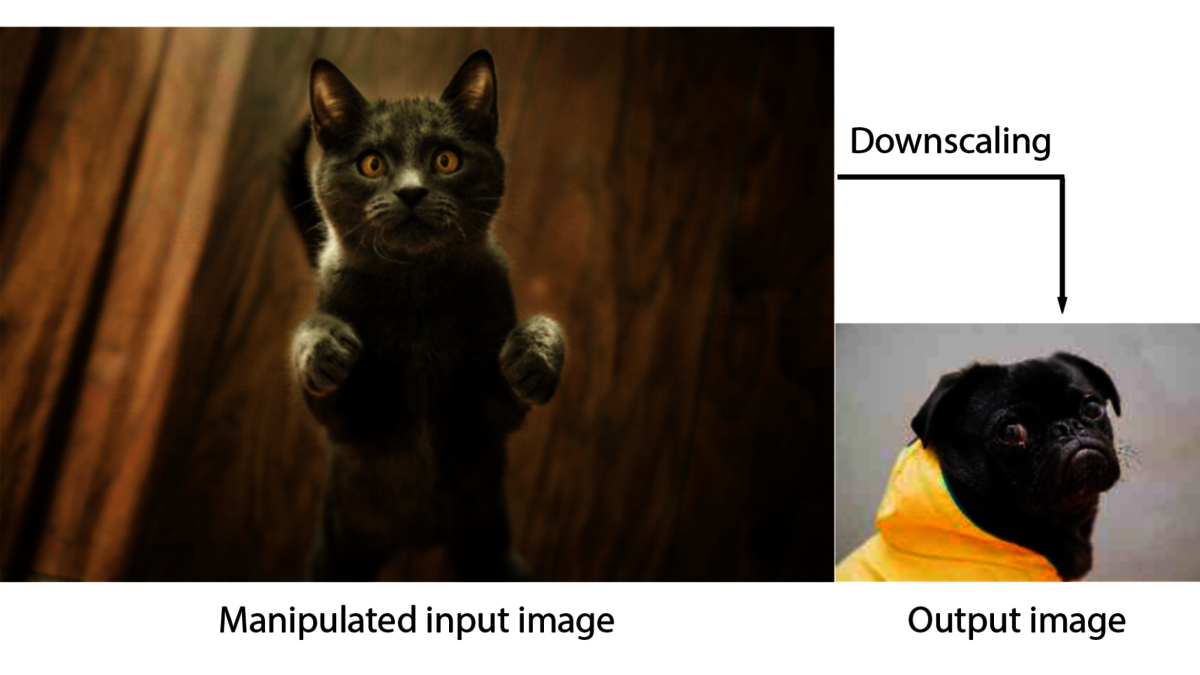

Machine learning is a rapidly advancing field. Complex ML methods not only enable increasingly powerful tools, they also open entry points for new forms of attack. Research into ML security usually focuses on the learning algorithms themselves, although the first step of an ML pipeline is the pre-processing of data. In addition to various cleaning and organizing operations on datasets, images are scaled down during pre-processing to speed up the learning process that follows. Konrad Rieck and his team showed that frequently used scaling algorithms are vulnerable to attacks. Input images can be manipulated so that they are indistinguishable from the original to the human eye but look completely different after downscaling.

The vulnerability is rooted in the scaling process: most scaling algorithms consider only a few highly weighted pixels of an image and ignore the rest. Therefore, only these pixels need to be manipulated to achieve drastic changes in the downscaled image. Most pixels of the input picture remain untouched, which makes the changes invisible to the human eye. In general, scaling attacks are possible wherever downscaling takes place without low-pass filtering, even in video and audio media formats. These attacks are model-independent: they do not depend on knowledge of the learning model, features, or training data.
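The effect can be illustrated with a minimal sketch. It assumes a simplified nearest-neighbor downscaling that keeps every `factor`-th pixel; real library implementations differ in detail, and the function names `nearest_downscale` and `embed_target` are hypothetical, chosen here for illustration only. The attacker overwrites just the pixels that the scaler will sample.

```python
import numpy as np

def nearest_downscale(img: np.ndarray, factor: int) -> np.ndarray:
    """Simplified nearest-neighbor downscaling: keep every `factor`-th pixel."""
    return img[::factor, ::factor]

def embed_target(source: np.ndarray, target: np.ndarray, factor: int) -> np.ndarray:
    """Overwrite only the pixels that nearest_downscale() will sample."""
    attacked = source.copy()
    attacked[::factor, ::factor] = target
    return attacked

factor = 8
source = np.full((64, 64), 200, dtype=np.uint8)  # bright "original" image
target = np.zeros((8, 8), dtype=np.uint8)        # dark image the attacker wants

attacked = embed_target(source, target, factor)

# Fraction of pixels the attacker had to change in the full-size image.
changed_fraction = float(np.mean(attacked != source))

# What the learning system actually sees after pre-processing.
downscaled = nearest_downscale(attacked, factor)

print(f"pixels changed: {changed_fraction:.2%}")
print("downscaled equals target:", np.array_equal(downscaled, target))
```

In this toy setting, one pixel out of every 8×8 block is modified, yet the downscaled output is exactly the attacker's target image.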

“Image-scaling attacks can become a real threat in security-related ML applications. Imagine manipulated images of traffic signs being introduced into the learning process of an autonomous driving system! In BIFOLD we develop methods for the effective detection and prevention of modern attacks like these.”

“Attackers don’t need to know the ML training model and can even succeed with image-scaling attacks in otherwise robust neural networks,” says Konrad Rieck. “Based on our analysis, we were able to identify a few algorithms that withstand image-scaling attacks and introduce a method to reconstruct attacked images.”
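Why some scaling algorithms are less affected can be sketched as follows. The example assumes plain block averaging as a stand-in for downscaling with low-pass filtering; it is an illustration of the principle, not the defense proposed in the paper, and the function name `area_downscale` is hypothetical. Because every pixel in a block contributes equally, a sparse manipulation of a few pixels has only a small influence on the result.

```python
import numpy as np

def area_downscale(img: np.ndarray, factor: int) -> np.ndarray:
    """Downscale by averaging each factor x factor block (all pixels weighted equally)."""
    h, w = img.shape
    blocks = img.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

factor = 8
source = np.full((64, 64), 200.0)   # bright "original" image

# Sparse manipulation: darken one pixel per 8x8 block.
attacked = source.copy()
attacked[::factor, ::factor] = 0.0

# Sampling every 8th pixel picks up only the manipulated pixels.
sampled = attacked[::factor, ::factor]

# Averaging dilutes the manipulation across all 64 pixels of each block.
averaged = area_downscale(attacked, factor)

print("sampled output value:", sampled[0, 0])
print("averaged output value:", averaged[0, 0])
```

Here the sampled output is fully controlled by the manipulated pixels, while the averaged output stays close to the original brightness of 200.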

Further information is available at https://scaling-attacks.net/.

The publication in detail:

Erwin Quiring, David Klein, Daniel Arp, Martin Johns, Konrad Rieck: Adversarial Preprocessing: Understanding and Preventing Image-Scaling Attacks in Machine Learning. USENIX Security Symposium 2020: 1363-1380

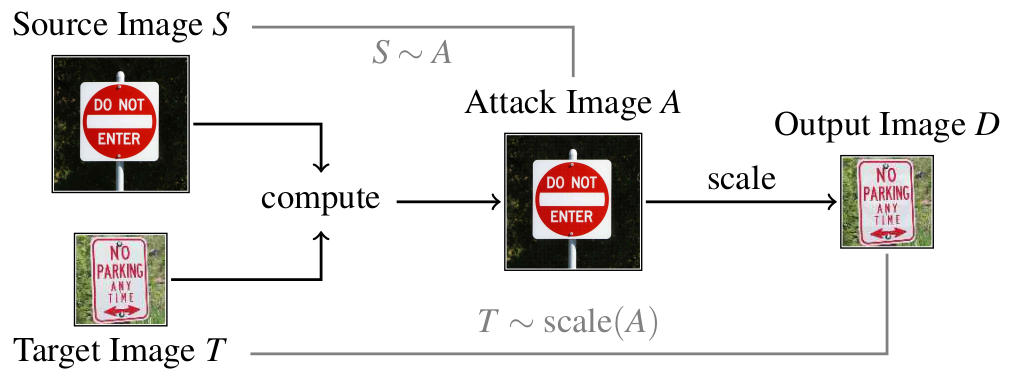

Machine learning has made remarkable progress in the last years, yet its success has been overshadowed by different attacks that can thwart its correct operation. While a large body of research has studied attacks against learning algorithms, vulnerabilities in the preprocessing for machine learning have received little attention so far. An exception is the recent work of Xiao et al. that proposes attacks against image scaling. In contrast to prior work, these attacks are agnostic to the learning algorithm and thus impact the majority of learning-based approaches in computer vision. The mechanisms underlying the attacks, however, are not understood yet, and hence their root cause remains unknown.

In this paper, we provide the first in-depth analysis of image-scaling attacks. We theoretically analyze the attacks from the perspective of signal processing and identify their root cause as the interplay of downsampling and convolution. Based on this finding, we investigate three popular imaging libraries for machine learning (OpenCV, TensorFlow, and Pillow) and confirm the presence of this interplay in different scaling algorithms. As a remedy, we develop a novel defense against image-scaling attacks that prevents all possible attack variants. We empirically demonstrate the efficacy of this defense against non-adaptive and adaptive adversaries.

In the media:

- Heise online (November 11, 2012): “l+f: Wenn Computer Katzen in Hunde verwandeln” (“l+f: When computers turn cats into dogs”)

- TechTalks (August 08, 2021): “Image-scaling attacks highlight dangers of adversarial machine learning”

More information is available from:

Prof. Dr. Konrad Rieck

Technische Universität Braunschweig

Institute of System Security

Rebenring 56

D-38106 Braunschweig