Reflections on ten years of AI research and a look ahead

In 2015, the U.S. Defense Advanced Research Projects Agency (DARPA) launched its program on Explainable Artificial Intelligence (XAI) with the goal of giving users a better understanding of, greater trust in, and more effective control over AI systems. That same year, researchers at the Technical University of Berlin (TU Berlin)/BIFOLD and the Fraunhofer Heinrich Hertz Institute (HHI) developed a method called Layer-wise Relevance Propagation (LRP), the first systematic approach to explaining the decisions of neural networks. Today, XAI methods are not only widely used in research and industry but are also embedded in legal frameworks, such as the "Right to Explanation" in the EU’s GDPR and the transparency requirements in the EU AI Act. National AI Day on July 16 offers an opportunity to reflect on the past and future of XAI, especially the role played by Berlin institutions such as TU Berlin, Fraunhofer HHI, and BIFOLD.

The desire to peek inside the "black box" of AI, to understand what a model has learned and how it makes decisions, is nearly as old as AI itself. But with the rise of deep learning in 2012, the field saw a major shift: models became extremely complex, making it increasingly difficult to understand how decisions were made. Today, large language models (LLMs) with tens or hundreds of billions of parameters surpass the complexity of the human brain. Researchers at TU Berlin, Fraunhofer HHI, and BIFOLD have played a central role in shaping the field of Explainable AI from its inception.

Explaining Individual Predictions (2012–2018)

The Layer-wise Relevance Propagation (LRP) method was developed in 2015 by a team led by Prof. Dr. Klaus-Robert Müller, BIFOLD Co-Director and Chair of Machine Learning at TU Berlin, and Prof. Dr. Wojciech Samek, Head of the Artificial Intelligence Department at Fraunhofer HHI, professor at TU Berlin, and BIFOLD Fellow. “Our goal was to make individual model decisions transparent and understandable. The method uses so-called heatmaps to visualize which input features, such as specific pixels in images or words in texts, contributed most to the model’s prediction,” explains Samek. At the time, LRP was the first general method for systematically explaining neural network decisions – and it is still used worldwide. The core idea: the model's decision is propagated backward through the network, assigning higher relevance values to the neurons that contributed most strongly to the outcome. The method is highly efficient and can even be applied to large language models with billions of parameters. In practice, such explanations can reveal flawed behavior: for example, some models were shown to perform well by essentially "cheating" rather than genuinely understanding their tasks. A well-known case: an image classifier learned to detect horses not by recognizing the animals themselves, but by spotting a copyright watermark that appeared frequently in horse images.
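The backward propagation idea described above can be illustrated with a small sketch. It assumes a tiny fully connected ReLU network and uses the epsilon-stabilised propagation rule, one of several LRP rules. It is not the researchers' implementation, and the function name `lrp_epsilon`, the toy weights, and the input are hypothetical values chosen for illustration only.

```python
import numpy as np

def lrp_epsilon(x, weights, biases, target, eps=1e-6):
    """Relevance of each input feature for output `target` of a small ReLU MLP.

    Illustrative sketch of the LRP epsilon rule; all names are hypothetical.
    Each weight matrix has shape (inputs, outputs).
    """
    # Forward pass, storing the input to every layer.
    activations = [x]
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = activations[-1] @ W + b
        is_last = i == len(weights) - 1
        activations.append(z if is_last else np.maximum(z, 0.0))

    # Start with all relevance on the output that is being explained.
    relevance = np.zeros_like(activations[-1])
    relevance[target] = activations[-1][target]

    # Backward pass: redistribute relevance layer by layer, in proportion
    # to how much each lower-layer neuron contributed to each upper neuron.
    layers = zip(reversed(weights), reversed(biases), reversed(activations[:-1]))
    for W, b, a in layers:
        z = a @ W + b
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabiliser, avoids division by zero
        s = relevance / z
        relevance = a * (W @ s)
    return relevance

# Toy network: 3 inputs -> 2 hidden ReLU units -> 2 outputs, zero biases.
weights = [
    np.array([[1.0, -1.0], [0.5, 2.0], [-1.0, 1.0]]),
    np.array([[1.0, -1.0], [2.0, 0.5]]),
]
biases = [np.zeros(2), np.zeros(2)]
x = np.array([1.0, 2.0, 3.0])

relevance = lrp_epsilon(x, weights, biases, target=0)
print(relevance)        # one relevance score per input feature
print(relevance.sum())  # with zero biases, close to the explained output value
```

In this toy case the scores come out at approximately -2, 8, and 6, which sum to the explained output value of 12. For an image model, the same per-input scores would be rendered as a heatmap over pixels.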

Understanding the Model (2018–2023)

The second wave of XAI research aimed to go deeper: not just to explain what a model reacts to, but to understand how it works internally and what concepts it learns. The research teams at TU Berlin, Fraunhofer HHI, and BIFOLD developed several innovative analysis tools. Notable among them are Concept Relevance Propagation (CRP) and Disentangled Relevant Subspace Analysis (DRSA). Building on the principles of LRP, these methods extend it by analyzing not only the relevance of input data, but also the role of individual neurons and substructures within the network.

For example, CRP has been used to visualize the learned concepts in a neural network trained to classify Alzheimer’s disease from quantitative MRI scans. The method was able to identify and visualize the specific features used by the model to distinguish between “diseased” and “healthy” patients, and match them with known disease-affected brain regions. This level of explanation is crucial, not just in medical applications, but in any domain where decisions must be trustworthy and scientifically grounded.

A Holistic Understanding (2023–Today)

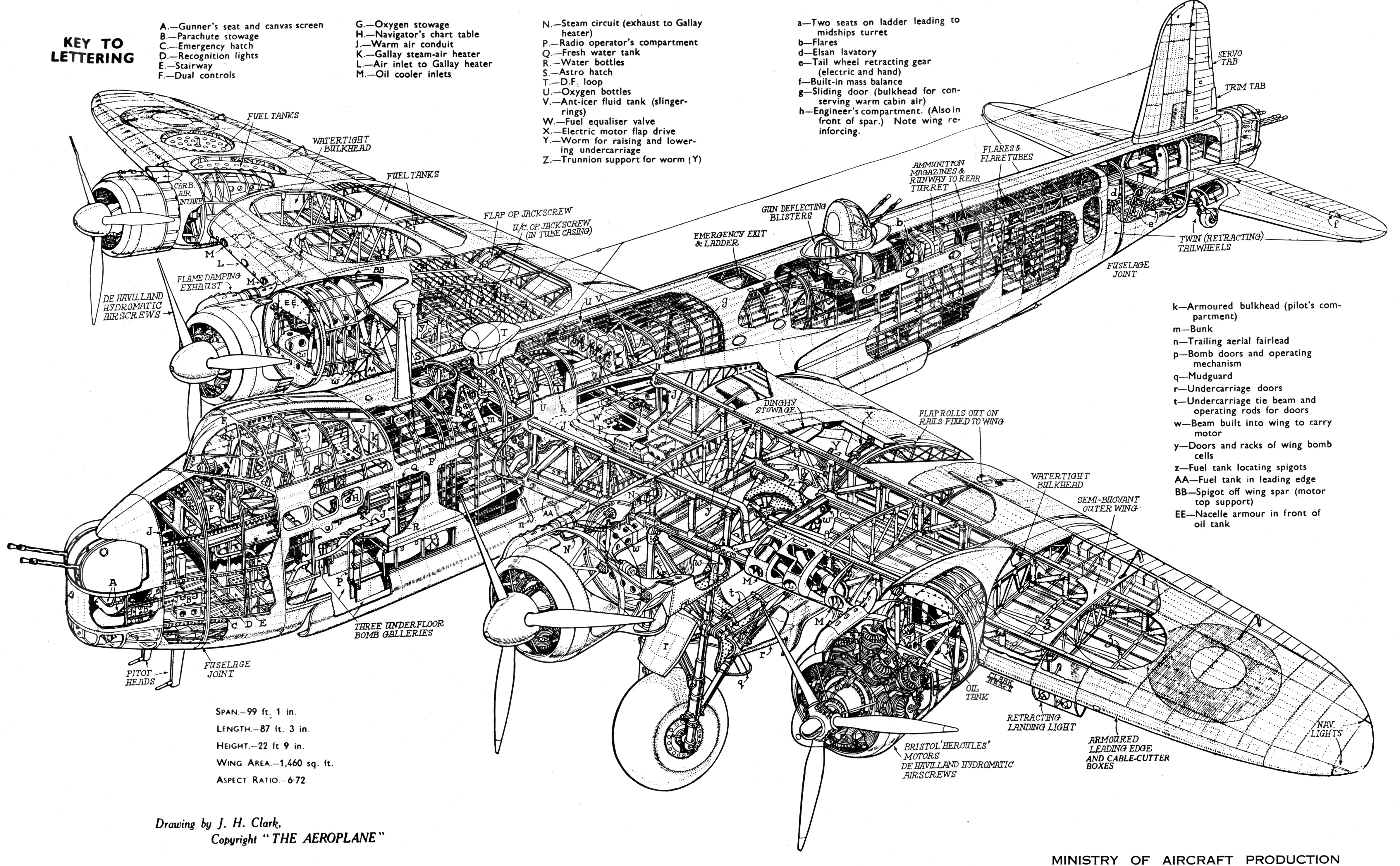

“Right now, the focus is shifting toward a systematic and comprehensive understanding of AI models: their behavior, their internal representations, and how they function,” says Wojciech Samek. “That’s why we recently released ‘SemanticLens’, a system designed to make the role and quality of every component, every neuron, transparent.” Samek compares it to a highly complex technical system like an Airbus A340-600, which consists of over four million individual parts. Aircraft engineers must understand and document the function and reliability of each component to ensure the safety of the entire system. In contrast, the function of individual neurons in neural networks remains largely unknown, making automated testing and reliability assessment difficult. SemanticLens fills this gap, enabling for the first time structured, systematic model analysis and validation. This marks a crucial step toward verifiable, trustworthy, and controllable AI systems, particularly for high-stakes and safety-critical applications.

What Will the Next 10 Years Bring?

“The focus of XAI will shift in the coming years,” predicts Samek. “We’ll move away from purely post-hoc explanations toward interactive and integrative approaches that make explanation a core part of human-AI interaction.” Key questions are emerging: What types of explanations are useful to users in different contexts? What should an explainable interface look like? And how can information flow back from the human to the model – for example, to correct misunderstandings or guide behavior?

Explainability will evolve from being a diagnostic tool to becoming an active control mechanism – a critical development for the safe and responsible use of modern AI systems. Another promising frontier is the use of explainable AI in scientific research: applying interpretable models to generate new insights in the natural, life, and social sciences. Researchers from TU Berlin, Fraunhofer HHI, and BIFOLD have already made important contributions in fields such as cancer research, quantum chemistry, and history.