How can we trust AI fact-checkers? New research shows the power of explanations.

As AI-powered fact-checkers become more common in newsrooms and on social media platforms, the question is no longer whether we should use them, but how. A new study from researchers at BIFOLD and the Karlsruhe Institute of Technology (KIT) reveals that the secret to trustworthy AI fact-checkers may lie not just in what they say, but in how they explain themselves.

The publication by Vera Schmitt and co-authors was presented at the ACM Workshop on Multimedia AI against Disinformation (MAD’25), which she also co-organizes with leading experts in this field. In a world grappling with the proliferation of mis- and disinformation, AI systems are increasingly tasked with distinguishing factual from fabricated content. However, these systems often operate as "black boxes," providing verdicts without clear explanations. For high-stakes applications, robustness and effective transparency are indispensable if AI systems are to be used for intelligent decision support.

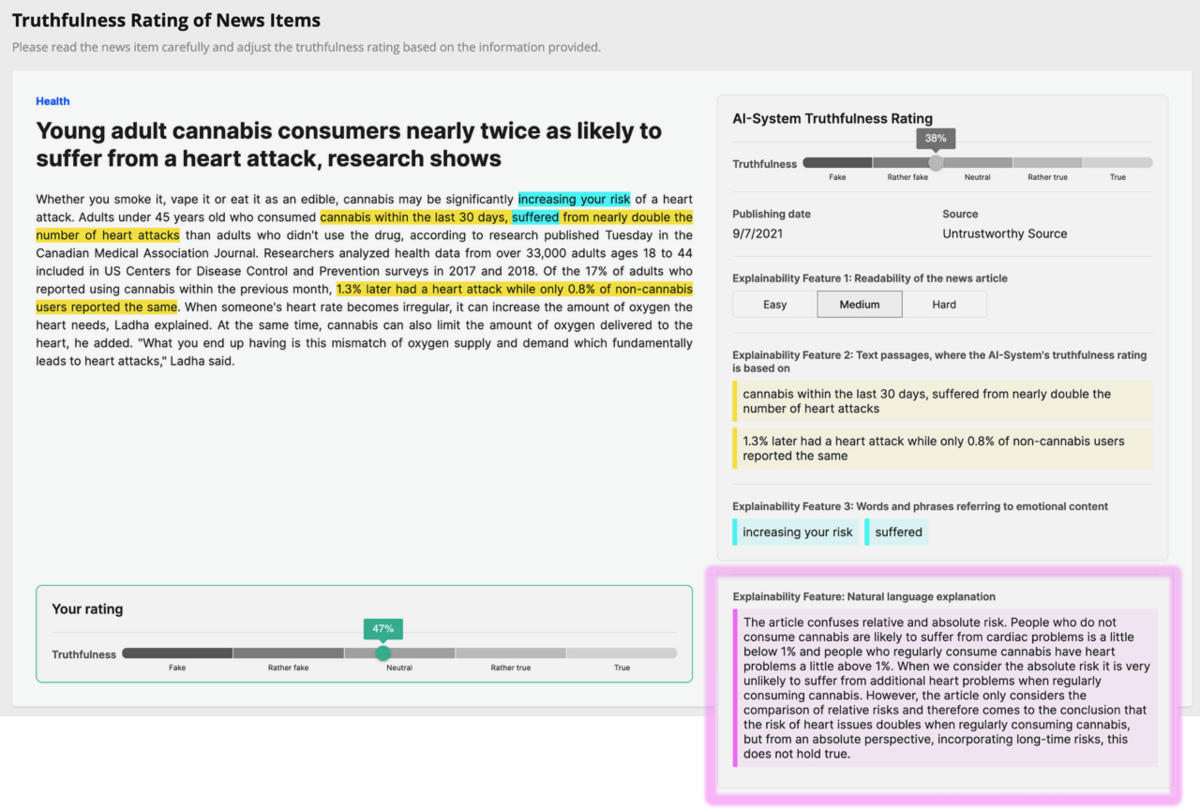

Lead author Vera Schmitt and her team took a human-centered approach to investigating the impact of explainability features in AI systems designed for fact-checking. Their research focused on how these features influence user performance, perceived usefulness, and understandability. To evaluate this, the team used a previously developed framework and a fact-checking user interface. They exposed users to various test scenarios, assessing the effectiveness of collaboration between AI systems and both lay and expert users across "rather false," "false," and "true" information contexts. Additionally, some scenarios were manipulated specifically to test for over-reliance on AI recommendations.

They used their experimental setting to answer two questions:

- Can explanations enhance the performance, understandability, and usefulness of a fact-checking system?

- Is the provided information sufficient to determine the truthfulness of the given news items?

Users benefit significantly from explanations

The results show that explanations significantly improved the perceived understandability and usefulness of the fact-checking system. Both salient features and free-text explanations enhanced understandability, with free-text explanations being most effective. All explanation types increased perceived usefulness compared to no explanations, indicating that explanations generally boost the Quality of Experience.

While explanations helped, they did not consistently improve the accuracy of human-AI fact-checking and could sometimes lead to overconfidence. Free-text explanations led to more varied interpretations, suggesting a trade-off between detail and consistency. Users frequently requested additional information, such as details about source credibility, publication context (date, citations), and insights into the AI's reasoning, indicating that the provided information, even with explanations, was often not considered sufficient for a definitive truthfulness assessment.

Based on the findings from this and her previous research, Vera Schmitt states that “an individualized approach to explanation development is necessary, one that accounts for varying levels of user expertise to achieve effective and truly transparent explanations. This approach necessitates explanations at different levels of abstraction, integrating both interpretability methods and post-hoc XAI approaches to facilitate genuine transparency.”

The publication in detail

Beyond Transparency: Evaluating Explainability in AI-Supported Fact-Checking. Vera Schmitt, Isabel Bezzaoui, Charlott Jakob, Premtim Sahitaj, Qianli Wang, Arthur Hilbert, Max Upravitelev, Jonas Fegert, Sebastian Möller, Veronika Solopova. https://dl.acm.org/doi/10.1145/3733567.3735566

This research builds on prior work. It continues a previously developed XAI testing framework (https://dl.acm.org/doi/pdf/10.1145/3643491.3660283, published at MAD 2024) and incorporates empirical investigations that compared various XAI features, involving over 400 crowdworkers and 27 journalists (https://dl.acm.org/doi/pdf/10.1145/3630106.3659031, published at FAccT 2024). This ongoing research is a collaborative effort with KIT, Tel Aviv University (Prof. Joachim Meyer), and DFKI.