Machine Learning Group contributes three papers and one workshop

Together with their teams, BIFOLD researchers will contribute three papers in the field of machine learning to the twelfth International Conference on Learning Representations (ICLR), which takes place in Vienna from May 7 to May 11, 2024. The annual conference is globally renowned for presenting and publishing cutting-edge research on all aspects of deep learning, as used in artificial intelligence, statistics, data science, and neuroscience, and in critical application areas such as machine vision, computational biology, speech recognition, text understanding, and robotics.

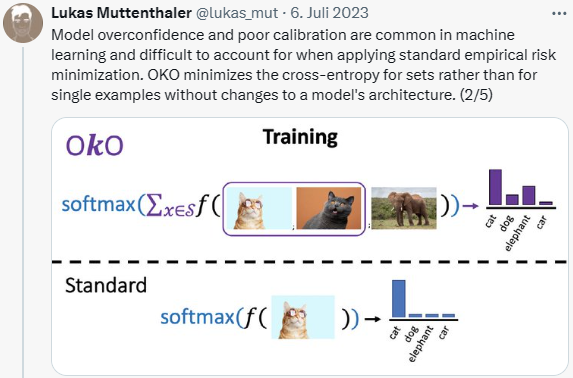

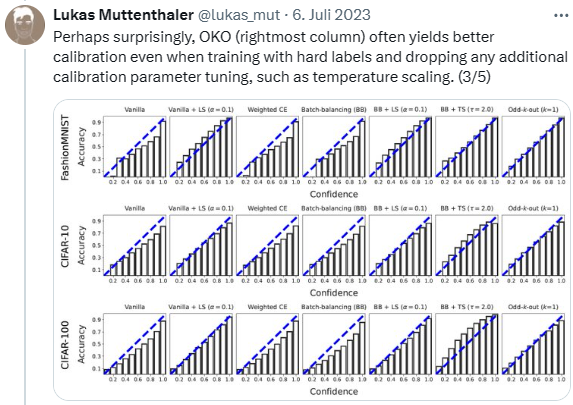

The conference paper “Set learning for accurate and calibrated models” introduces OKO (odd-k-out learning), a principled set learning approach for training more accurate and better calibrated models. OKO is designed to be easy to use: it changes how data points are sampled during training, grouping them into sets, and adjusts the cross-entropy computation so that the loss is computed over sets rather than single examples. Models trained with OKO are expected to make predictions that are not only accurate but also well calibrated and reliable, meaning that their confidence better reflects how likely they are to be correct.

Publication details: Lukas Muttenthaler, Robert A. Vandermeulen, Qiuyi Zhang, Thomas Unterthiner, Klaus-Robert Müller. Set Learning for Accurate and Calibrated Models. Preprint, GitHub.
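To illustrate what "cross-entropy over sets" can look like, here is a minimal sketch under our own assumptions, not the authors' implementation. We assume each training set holds two examples of a "pair" class plus k "odd" examples from other classes, and that the model's per-example logits are summed over the set before a standard cross-entropy against the pair class. The sampler, the toy model, and all function names are hypothetical; the preprint and the GitHub code give the exact formulation.

```python
import math
import random


def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]


def sample_oko_set(examples_by_class, k, rng):
    """Hypothetical set sampler (our assumption, not the authors' code).

    Draws two examples from a randomly chosen 'pair' class and k 'odd'
    examples from other classes, then shuffles the set.
    Returns (members, pair_class).
    """
    classes = list(examples_by_class)
    pair_class = rng.choice(classes)
    members = rng.sample(examples_by_class[pair_class], 2)
    other_classes = [c for c in classes if c != pair_class]
    for _ in range(k):
        odd_class = rng.choice(other_classes)
        members.append(rng.choice(examples_by_class[odd_class]))
    rng.shuffle(members)
    return members, pair_class


def set_cross_entropy(per_example_logits, target):
    """Cross-entropy for a whole set: sum the logits over the set's members
    (assumed aggregation), then take -log softmax at the target class."""
    num_classes = len(per_example_logits[0])
    summed = [sum(logits[c] for logits in per_example_logits) for c in range(num_classes)]
    return -log_softmax(summed)[target]


# Usage with a stand-in "model" (hypothetical): each example is a (label, index)
# pair, and the toy model puts a logit of 2.0 on the example's own label.
def toy_logits(example, num_classes=3):
    label, _ = example
    return [2.0 if c == label else 0.0 for c in range(num_classes)]


rng = random.Random(0)
data = {c: [(c, i) for i in range(5)] for c in range(3)}
members, pair_label = sample_oko_set(data, k=1, rng=rng)
loss = set_cross_entropy([toy_logits(m) for m in members], pair_label)
print(f"set of {len(members)} examples, pair class {pair_label}, loss {loss:.3f}")
```

Because the logits are summed, the loss is low only when the set's majority class receives the largest total evidence, so the model is trained on relations between examples rather than on each example in isolation.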

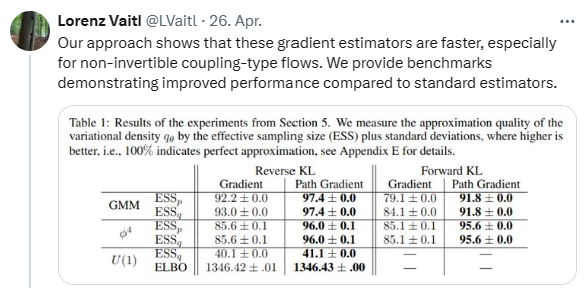

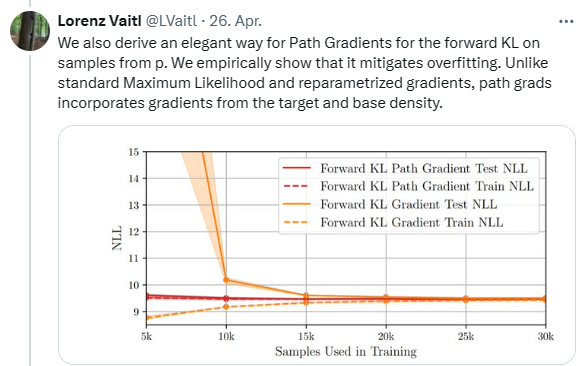

The conference paper “Fast and unified path gradient estimators for normalizing flows” derives efficient gradient estimators for coupling-type normalizing flows using the inverse function theorem. Recent work shows that, in variational inference, path gradient estimators for normalizing flows have lower variance than standard estimators, which improves training. However, they are often prohibitively expensive to compute and cannot be applied to maximum likelihood training in a scalable manner, which has severely hindered their widespread adoption. In this work, the researchers overcome these limitations. They propose a fast path gradient estimator that significantly improves computational efficiency and works for all normalizing flow architectures of practical relevance. They then show that the estimator can also be applied to maximum likelihood training, where it has a regularizing effect because it can take the form of a given target energy function into account. Empirically, they demonstrate its superior performance and reduced variance in several applications from the natural sciences.

Publication details: Lorenz Vaitl, Ludwig Winkler, Lorenz Richter, Pan Kessel. Fast and unified path gradient estimators for normalizing flows. Preprint, GitHub.
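To make the variance claim concrete, here is a toy sketch under our own assumptions: a one-dimensional affine "flow" x = mu + sigma * eps with eps ~ N(0, 1), a standard normal target p, and the reverse KL divergence E_q[log q(x) - log p(x)] as the variational objective. The path gradient keeps only the parameter dependence that flows through the sample x (the estimator also known as "sticking the landing"), while the standard total gradient additionally differentiates log q with respect to its parameters at fixed x. This is not the paper's fast estimator for coupling flows, which computes such gradients efficiently for real architectures; the function names are hypothetical.

```python
import random
import statistics


def per_sample_gradients(mu, sigma, eps):
    """Gradients of the reverse-KL integrand log q(x) - log p(x) for one sample.

    Flow: x = mu + sigma * eps, eps ~ N(0, 1), so q = N(mu, sigma^2).
    Target: p = N(0, 1) (an assumption for this toy).
    Returns (total_grad, path_grad), each a (d/dmu, d/dsigma) tuple.
    """
    x = mu + sigma * eps
    # d/dx [log q(x) - log p(x)] with q's parameters held fixed
    dscore_dx = -(x - mu) / sigma**2 + x
    dx_dmu, dx_dsigma = 1.0, eps
    # Path gradient: only the dependence through the sample x
    path = (dscore_dx * dx_dmu, dscore_dx * dx_dsigma)
    # Total gradient adds the explicit parameter derivative of log q at fixed x
    dlogq_dmu = (x - mu) / sigma**2
    dlogq_dsigma = (x - mu) ** 2 / sigma**3 - 1.0 / sigma
    total = (path[0] + dlogq_dmu, path[1] + dlogq_dsigma)
    return total, path


def gradient_variance(mu, sigma, n=10_000, seed=0):
    """Monte Carlo variance of the d/dmu component for both estimators."""
    rng = random.Random(seed)
    totals, paths = [], []
    for _ in range(n):
        total, path = per_sample_gradients(mu, sigma, rng.gauss(0.0, 1.0))
        totals.append(total[0])
        paths.append(path[0])
    return statistics.pvariance(totals), statistics.pvariance(paths)


# At the optimum (q equals p) compare the two estimators' variances.
total_var, path_var = gradient_variance(0.0, 1.0)
print(f"total-gradient variance {total_var:.3f}, path-gradient variance {path_var:.3f}")
```

At the optimum, the path gradient is exactly zero for every sample while the total gradient still fluctuates; away from the optimum both estimators have the same mean, because the extra score term in the total gradient has zero expectation under q.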

The workshop paper “An Analysis of Human Alignment of Latent Diffusion Models” aims to overcome the limited knowledge transfer between scientific communities, especially cognitive science, neuroscience, and machine learning. The authors seek to provide a theoretical foundation for research on representational alignment across these disciplines. They propose a unifying framework that can serve as a common language, making it easier for researchers to communicate and collaborate. The paper will be presented at the Re-Align Workshop.

Publication details: Lorenz Linhardt, Marco Morik, Sidney Bender, Naima Elosegui Borras. An analysis of human alignment of latent diffusion models. Preprint.
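To make "representational alignment" concrete: one widely used way to measure it is representational similarity analysis (RSA), which compares how two systems arrange the same set of stimuli. The sketch below is our own illustration, not the framework proposed in the paper; the Euclidean distance, the Pearson correlation, the function names, and the toy data are all assumptions.

```python
import math
from itertools import combinations


def dissimilarity_vector(representations):
    """Upper triangle of the representational dissimilarity matrix (RDM):
    Euclidean distance between every pair of stimuli, flattened."""
    return [
        math.dist(representations[i], representations[j])
        for i, j in combinations(range(len(representations)), 2)
    ]


def pearson(u, v):
    """Pearson correlation of two equal-length lists of floats."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    norm_u = math.sqrt(sum((a - mean_u) ** 2 for a in u))
    norm_v = math.sqrt(sum((b - mean_v) ** 2 for b in v))
    return cov / (norm_u * norm_v)


def rsa_score(reps_a, reps_b):
    """Correlate two systems' RDMs; rows must refer to the same stimuli in the same order."""
    return pearson(dissimilarity_vector(reps_a), dissimilarity_vector(reps_b))


# Toy data (hypothetical): four stimuli embedded by a "model", and a second
# system whose embedding is the same geometry rotated by 90 degrees and scaled by 2.
model_reps = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0]]
other_reps = [[-2.0 * y, 2.0 * x] for x, y in model_reps]
print(rsa_score(model_reps, other_reps))
```

Because RSA compares the geometry of representations rather than raw coordinates, it can relate systems with different dimensionalities, such as a model's embedding space and human similarity judgments. Spearman rank correlation is a frequent alternative to Pearson when only the ordering of dissimilarities should matter.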