Analyzing the alignment of computer vision systems and human mental representations

The field of computer vision has long since left the realm of research and is now used in countless everyday applications, such as object recognition and measuring the geometric structures of objects. One question that is rarely asked is: To what extent do computer vision systems see the world in the same way that humans do? Today’s computer vision models achieve human-level or near-human-level performance across a wide variety of vision tasks. However, their architectures, data, and learning algorithms differ in numerous ways from those that give rise to human vision.

In their recent paper “Human alignment of neural network representations”, which was accepted at ICLR 2023, BIFOLD researchers Lukas Muttenthaler, Jonas Dippel, Lorenz Linhardt and Robert A. Vandermeulen from the research group of BIFOLD Director Prof. Dr. Klaus-Robert Müller, together with Simon Kornblith from Google Brain, investigate the factors that affect the alignment between the representations learned by neural networks and human mental representations inferred from behavioral responses.

Does better classification result in more human-like representations?

Computer vision systems are increasingly used outside of research, but the extent to which the conceptual representations learned by these systems align with those used by humans remains unclear, not least because the primary focus of representation learning research has shifted away from similarities to human cognition and toward practical applications.

“Our work aims to understand the variables that drive the degree of alignment between neural network representations and human concept spaces, thereby answering the question of whether models that are better at classifying images naturally learn more human-like conceptual representations,” says Lukas Muttenthaler, explaining the focus of his work. “Prior work has investigated this question indirectly, by measuring models’ error consistency with humans, with mixed results. Networks trained on more data make somewhat more human-like errors, but do not necessarily obtain a better fit to brain data.”

Scaling is not all you need

The BIFOLD researchers approached the question of alignment between human and machine representation spaces more directly. They focused primarily on human similarity judgments collected from an “odd-one-out” task, in which humans saw three images and had to select the image most different from the other two. “To the best of our knowledge, this is the first study that systematically analyzes the differences between a variety of neural networks and human similarity judgments across three large behavioral datasets, and additionally pins down which human concepts machine learning models struggle with,” says Lukas Muttenthaler.
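The odd-one-out task can also be posed to a model's representations. The following is a minimal sketch and not the authors' implementation: it assumes every image has an embedding vector, uses cosine similarity as the similarity measure (an assumption), and predicts the odd one out as the image left over once the most similar pair has been identified. All function names and the toy data are hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(embeddings):
    """Pairwise cosine similarities between the rows of `embeddings`."""
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    return normed @ normed.T


def odd_one_out(embeddings, triplet):
    """Return the image index in `triplet` that the model treats as the odd one out."""
    sim = cosine_similarity_matrix(embeddings[list(triplet)])
    # Entry p holds the similarity of the pair that remains when item p is left out.
    remaining_pair_sims = np.array([sim[1, 2], sim[0, 2], sim[0, 1]])
    # The odd one out is the item excluded from the most similar pair.
    return triplet[int(np.argmax(remaining_pair_sims))]


def odd_one_out_accuracy(embeddings, triplets, human_choices):
    """Fraction of triplets where the model's choice matches the human choice."""
    predictions = np.array([odd_one_out(embeddings, t) for t in triplets])
    return float(np.mean(predictions == np.array(human_choices)))


# Toy data (hypothetical): four images embedded in two dimensions.
toy_embeddings = np.array([
    [1.0, 0.0],  # image 0, e.g. a dog
    [0.9, 0.1],  # image 1, e.g. a cat
    [0.0, 1.0],  # image 2, e.g. a car
    [0.1, 0.9],  # image 3, e.g. a truck
])
toy_triplets = [(0, 1, 2), (0, 2, 3), (1, 2, 3)]
toy_human_choices = [2, 0, 1]  # made-up human odd-one-out choices

accuracy = odd_one_out_accuracy(toy_embeddings, toy_triplets, toy_human_choices)
print(f"odd-one-out accuracy: {accuracy:.2f}")
```

A higher accuracy under this kind of measure would indicate that the similarity structure of the embedding space agrees more closely with human judgments. The choice of similarity function is a design decision, and other options such as the plain dot product could be substituted.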

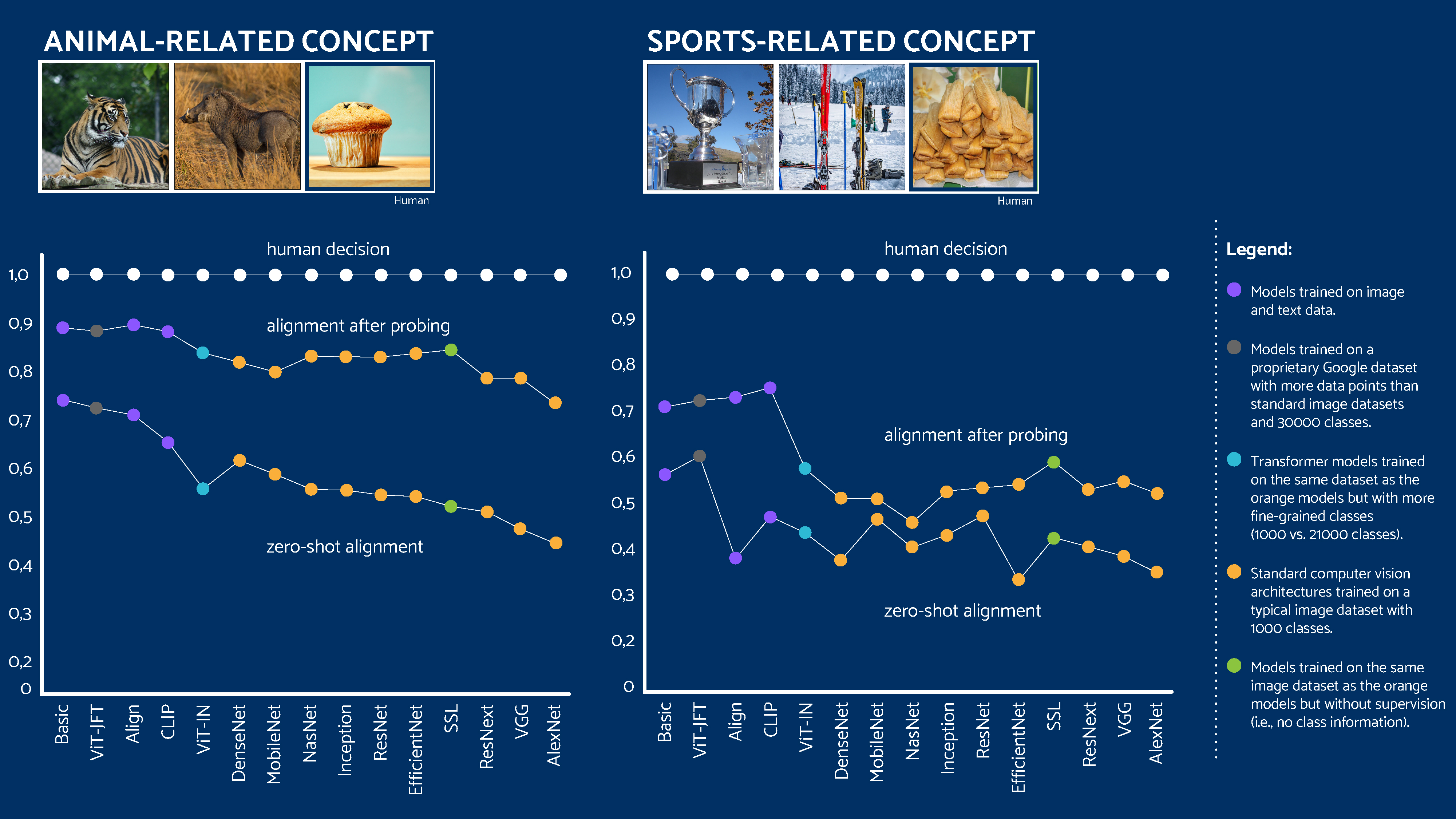

First, they found that scaling up the size of models does not lead to better alignment of their representations with human similarity judgments. Differences in human alignment across standard computer vision models are mainly attributable to the objective function with which a model was trained, whereas the specific architecture of a model and its size are both insignificant. Second, models trained on image and text data, or on more diverse data, achieve much better alignment than models trained on standard image datasets. Finally, relevant information for concepts that are important to human similarity judgments, such as animals, can be recovered from neural network representation spaces. However, representations of less important concepts, such as sports and royal objects, are more difficult to recover.

To obtain substantial improvements in the alignment of neural networks with human decisions, it may be necessary to incorporate additional forms of supervision when training the representation itself. Benefits of improving human/machine alignment may extend beyond accuracy on the triplet task, to transfer and retrieval tasks where it is important to capture human notions of similarity.

Human-like representations will become increasingly relevant

In daily life, many decisions are inherently different from standard image classification tasks, where an image is associated with a single class and all other classes in the data are simply ignored. Humans make choices about many objects simultaneously, which requires a different notion of similarity, one that involves higher-level concepts rather than fine-grained details. “Our research will help scientists across different disciplines (machine learning, cognitive science, neuroscience) to better understand which factors contribute to the alignment between neural network representations and human similarity judgments and to pinpoint relevant variables for designing AI systems that are more human-like. This is becoming increasingly important if we use AI systems for image retrieval, self-driving cars, or as chatbots with which we interact on a daily basis,” says Lukas Muttenthaler.

The publication in detail

Lukas Muttenthaler, Jonas Dippel, Lorenz Linhardt, Robert A. Vandermeulen, Simon Kornblith: "Human alignment of neural network representations", published as a conference paper at ICLR 2023.